import spacy

nlp = spacy.load("en_core_web_sm")

text = """The United States of America (U.S.A. or USA), commonly known as the United States (U.S. or US) or America, is a country primarily located in North America. It consists of 50 states, a federal district, five major unincorporated territories, 326 Indian reservations, and some minor possessions.[j] At 3.8 million square miles (9.8 million square kilometers), it is the world's third- or fourth-largest country by total area.[d] The United States shares significant land borders with Canada to the north and Mexico to the south, as well as limited maritime borders with the Bahamas, Cuba, and Russia. With a population of more than 331 million people, it is the third most populous country in the world. The national capital is Washington, D.C., and the most populous city is New York."""

doc = nlp(text)14 spaCy使用

14.1 安装

pip install spacy安装语言模型

python -m spacy download en_core_web_sm

python -m spacy download zh_core_web_sm

python -m spacy download en_core_web_lg

python -m spacy download zh_core_web_lg或者先下载具体的模型再安装,再安装model。

spaCy model官方的下载地址: https://github.com/explosion/spacy-models/tags

或者采用我缓存的模型,速度会比较快:

- 英文模型下载 en_core_web_lg-3.5.0.tar.gz

- 中文模型下载 zh_core_web_lg-3.5.0.tar.gz

pip install ./en_core_web_lg-3.5.0.tar.gz

pip install ./zh_core_web_lg-3.5.0.tar.gz14.1.1 Run spaCy with GPU

如果电脑有GPU,可以通过GPU加速计算:

pip install -U spacy[cuda113]import spacy

spacy.prefer_gpu()

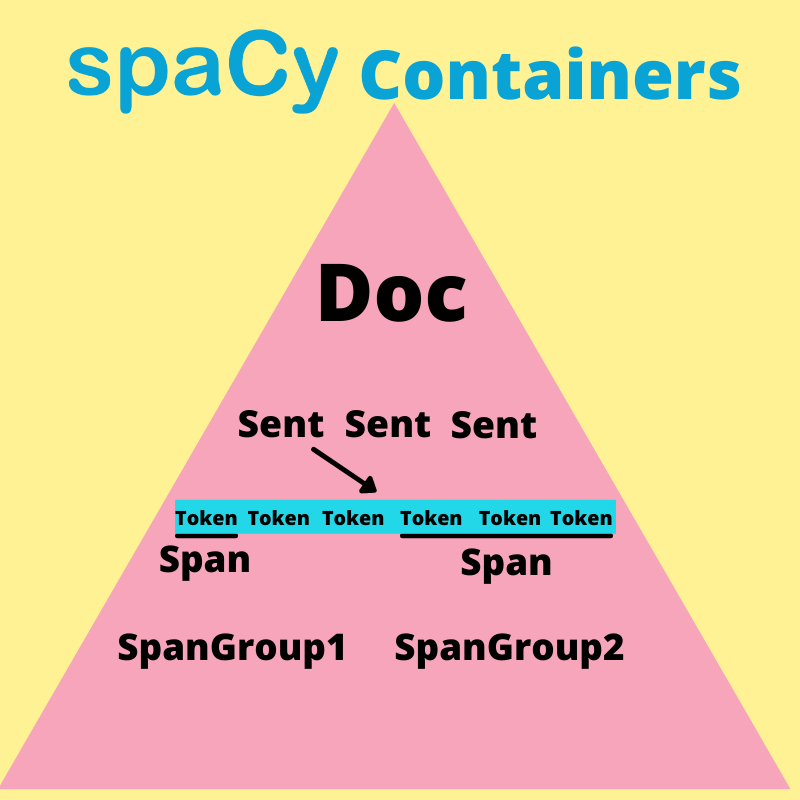

nlp = spacy.load("en_core_web_sm")14.2 Doc Container

14.2.1 token

for i, token in enumerate(doc[:15]):

print(i, token, sep="\t")0 The

1 United

2 States

3 of

4 America

5 (

6 U.S.A.

7 or

8 USA

9 )

10 ,

11 commonly

12 known

13 as

14 thefor i, token in enumerate(text[:15]):

print(i, token, sep="\t")0 T

1 h

2 e

3

4 U

5 n

6 i

7 t

8 e

9 d

10

11 S

12 t

13 a

14 tfor i, token in enumerate(text.split()[:15]):

print(i, token, sep="\t")0 The

1 United

2 States

3 of

4 America

5 (U.S.A.

6 or

7 USA),

8 commonly

9 known

10 as

11 the

12 United

13 States

14 (U.S.14.2.2 sentences

for sent in doc.sents:

print(sent, end="\n\n")The United States of America (U.S.A. or USA), commonly known as the United States (U.S. or US) or America, is a country primarily located in North America.

It consists of 50 states, a federal district, five major unincorporated territories, 326 Indian reservations, and some minor possessions.[j]

At 3.8 million square miles (9.8 million square kilometers), it is the world's third- or fourth-largest country by total area.[d]

The United States shares significant land borders with Canada to the north and Mexico to the south, as well as limited maritime borders with the Bahamas, Cuba, and Russia.

With a population of more than 331 million people, it is the third most populous country in the world.

The national capital is Washington, D.C., and the most populous city is New York.

doc.sents attribute is a generator. In python, we can usually iterate over generators by converting them into a list.

sentence1 = list(doc.sents)[0]

print (sentence1)The United States of America (U.S.A. or USA), commonly known as the United States (U.S. or US) or America, is a country primarily located in North America.14.2.3 Token Attributes

The token object contains a lot of different attributes that are VITAL do performing NLP in spaCy. We will be working with a few of them, such as:

- .text

- .head

- .left_edge

- .right_edge

- .ent_type_

- .iob_

- .lemma_

- .morph

- .pos_

- .dep_

- .lang_

token attributes in spaCy: https://spacy.io/api/attributes

token = sentence1[2]

print(token)

print(" 1", token.text)

print(" 2", token.ent_type_)

print(" 3", token.lemma_)

print(" 4", token.is_alpha)

print(" 5", token.is_space)

print(" 6", token.is_stop)

print(" 7", token.is_punct)

print(" 8", token.is_currency)

print(" 9", token.is_upper)

print("10", token.pos_) # Part of SpeechStates

1 States

2 GPE

3 States

4 True

5 False

6 False

7 False

8 False

9 False

10 PROPN14.2.4 Part of Speech Tagging (POS)

In the field of computational linguistics, understanding parts-of-speech is essential. SpaCy offers an easy way to parse a text and identify its parts of speech. Below, we will iterate across each token (word or punctuation) in the text and identify its part of speech.

for token in sentence1:

print (token.text, token.pos_, token.dep_, sep="\t")The DET det

United PROPN compound

States PROPN nsubj

of ADP prep

America PROPN pobj

( PUNCT punct

U.S.A. PROPN appos

or CCONJ cc

USA PROPN conj

) PUNCT punct

, PUNCT punct

commonly ADV advmod

known VERB acl

as ADP prep

the DET det

United PROPN compound

States PROPN pobj

( PUNCT punct

U.S. PROPN appos

or CCONJ cc

US PROPN conj

) PUNCT punct

or CCONJ cc

America PROPN conj

, PUNCT punct

is AUX ROOT

a DET det

country NOUN attr

primarily ADV advmod

located VERB acl

in ADP prep

North PROPN compound

America PROPN pobj

. PUNCT punctfrom spacy import displacy

displacy.render(sentence1, style="dep")14.2.5 Named Entity Recognition¶

Another essential task of NLP, is named entity recognition, or NER. I spoke about NER in the last notebook. Here, I’d like to demonstrate how to perform basic NER via spaCy. Again, we will iterate over the doc object as we did above, but instead of iterating over doc.sents, we will iterate over doc.ents. For our purposes right now, I simply want to print off each entity’s text (the string itself) and its corresponding label (note the _ after label). I will be explaining this process in much greater detail in the next two notebooks.

for ent in doc.ents:

print (ent.text, ent.label_)The United States of America GPE

U.S.A. GPE

USA GPE

the United States GPE

U.S. GPE

US GPE

America GPE

North America LOC

50 CARDINAL

five CARDINAL

326 CARDINAL

Indian NORP

3.8 million square miles QUANTITY

9.8 million square kilometers QUANTITY

fourth ORDINAL

The United States GPE

Canada GPE

Mexico GPE

Bahamas GPE

Cuba GPE

Russia GPE

more than 331 million CARDINAL

third ORDINAL

Washington GPE

D.C. GPE

New York GPE| Type | Description |

|---|---|

| PERSON | People, including fictional. |

| NORP | Nationalities or religious or political groups. |

| FAC | Buildings, airports, highways, bridges, etc. |

| ORG | Companies, agencies, institutions, etc. |

| GPE | Countries, cities, states. |

| LOC | Non-GPE locations, mountain ranges, bodies of water. |

| PRODUCT | Objects, vehicles, foods, etc. (Not services.) |

| EVENT | Named hurricanes, battles, wars, sports events, etc. |

| WORK_OF_ART | Titles of books, songs, etc. |

| LAW | Named documents made into laws. |

| LANGUAGE | Any named language. |

| DATE | Absolute or relative dates or periods. |

| TIME | Times smaller than a day. |

| PERCENT | Percentage, including “%”. |

| MONEY | Monetary values, including unit. |

| QUANTITY | Measurements, as of weight or distance. |

| ORDINAL | “first”, “second”, etc. |

| CARDINAL | Numerals that do not fall under another type. |

We can use the spacy.explain() on all entities for one example.

for ent in doc.ents:

print(f'Entity: {ent}, Label: {ent.label_}, {spacy.explain(ent.label_)}')Entity: The United States of America, Label: GPE, Countries, cities, states

Entity: U.S.A., Label: GPE, Countries, cities, states

Entity: USA, Label: GPE, Countries, cities, states

Entity: the United States, Label: GPE, Countries, cities, states

Entity: U.S., Label: GPE, Countries, cities, states

Entity: US, Label: GPE, Countries, cities, states

Entity: America, Label: GPE, Countries, cities, states

Entity: North America, Label: LOC, Non-GPE locations, mountain ranges, bodies of water

Entity: 50, Label: CARDINAL, Numerals that do not fall under another type

Entity: five, Label: CARDINAL, Numerals that do not fall under another type

Entity: 326, Label: CARDINAL, Numerals that do not fall under another type

Entity: Indian, Label: NORP, Nationalities or religious or political groups

Entity: 3.8 million square miles, Label: QUANTITY, Measurements, as of weight or distance

Entity: 9.8 million square kilometers, Label: QUANTITY, Measurements, as of weight or distance

Entity: fourth, Label: ORDINAL, "first", "second", etc.

Entity: The United States, Label: GPE, Countries, cities, states

Entity: Canada, Label: GPE, Countries, cities, states

Entity: Mexico, Label: GPE, Countries, cities, states

Entity: Bahamas, Label: GPE, Countries, cities, states

Entity: Cuba, Label: GPE, Countries, cities, states

Entity: Russia, Label: GPE, Countries, cities, states

Entity: more than 331 million, Label: CARDINAL, Numerals that do not fall under another type

Entity: third, Label: ORDINAL, "first", "second", etc.

Entity: Washington, Label: GPE, Countries, cities, states

Entity: D.C., Label: GPE, Countries, cities, states

Entity: New York, Label: GPE, Countries, cities, statesdisplacy.render(doc, style="ent")14.2.6 noun phrases

for noun_chunk in doc.noun_chunks:

# Print noun chunk

print(noun_chunk)The United States

America

U.S.A.

USA

the United States

U.S.

US

America

a country

North America

It

50 states

a federal district

five major unincorporated territories

326 Indian reservations

3.8 million square miles

9.8 million square kilometers

it

the world's third- or fourth-largest country

total area.[d

The United States

significant land borders

Canada

the north

Mexico

the south

limited maritime borders

the Bahamas

Cuba

Russia

a population

more than 331 million people

it

the third most populous country

the world

The national capital

Washington

D.C.

the most populous city

New York14.3 Standard Pipes from spaCy

https://spacy.io/api#architecture-pipeline

14.3.1 add pipeline example

nlp = spacy.blank("en")

nlp.add_pipe("sentencizer")<spacy.pipeline.sentencizer.Sentencizer at 0x1dc1fa3ed00>%%timeit

doc = nlp(text)

len(list(doc.sents))170 µs ± 49.2 µs per loop (mean ± std. dev. of 7 runs, 10,000 loops each)nlp2 = spacy.load("en_core_web_sm")%%timeit

doc = nlp2(text)

len(list(doc.sents))24.1 ms ± 409 µs per loop (mean ± std. dev. of 7 runs, 10 loops each)nlp.pipeline[('sentencizer', <spacy.pipeline.sentencizer.Sentencizer at 0x1dc1fa3ed00>)]nlp2.pipeline[('tok2vec', <spacy.pipeline.tok2vec.Tok2Vec at 0x1dc1f8f3100>),

('tagger', <spacy.pipeline.tagger.Tagger at 0x1dc1f8f32e0>),

('parser', <spacy.pipeline.dep_parser.DependencyParser at 0x1dc236d5c80>),

('attribute_ruler',

<spacy.pipeline.attributeruler.AttributeRuler at 0x1dc1f9f6980>),

('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer at 0x1dc1fa0e7c0>),

('ner', <spacy.pipeline.ner.EntityRecognizer at 0x1dc1b776cf0>)]14.3.2 customize pipeline

# Load a small language model for English, but exclude named entity

# recognition ('ner') and syntactic dependency parsing ('parser').

nlp = spacy.load('en_core_web_sm', exclude=['ner', 'parser'])# Examine the active components under the Language object 'nlp'

nlp.pipeline[('tok2vec', <spacy.pipeline.tok2vec.Tok2Vec at 0x1dc21cb4a00>),

('tagger', <spacy.pipeline.tagger.Tagger at 0x1dc238acee0>),

('attribute_ruler',

<spacy.pipeline.attributeruler.AttributeRuler at 0x1dc25aaec80>),

('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer at 0x1dc25ac5500>)]# Analyse the pipeline and store the analysis under 'pipe_analysis'

pipe_analysis = nlp.analyze_pipes(pretty=True)

============================= Pipeline Overview =============================

# Component Assigns Requires Scores Retokenizes

- --------------- ----------- -------- --------- -----------

0 tok2vec doc.tensor False

1 tagger token.tag tag_acc False

2 attribute_ruler False

3 lemmatizer token.lemma lemma_acc False

✔ No problems found.14.3.3 Merging noun phrases and named entities

import spacy

nlp = spacy.load("en_core_web_sm")

nlp.add_pipe('merge_noun_chunks')

doc = nlp(text)nlp.pipeline[('tok2vec', <spacy.pipeline.tok2vec.Tok2Vec at 0x1dc22fad400>),

('tagger', <spacy.pipeline.tagger.Tagger at 0x1dc22fadfa0>),

('parser', <spacy.pipeline.dep_parser.DependencyParser at 0x1dc26880d60>),

('attribute_ruler',

<spacy.pipeline.attributeruler.AttributeRuler at 0x1dc280c4b00>),

('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer at 0x1dc280d21c0>),

('ner', <spacy.pipeline.ner.EntityRecognizer at 0x1dc26880ba0>),

('merge_noun_chunks',

<function spacy.pipeline.functions.merge_noun_chunks(doc: spacy.tokens.doc.Doc) -> spacy.tokens.doc.Doc>)]for i, token in enumerate(doc[:10]):

print(i, token, sep="\t")0 The United States

1 of

2 America

3 (

4 U.S.A.

5 or

6 USA

7 )

8 ,

9 commonlynlp.remove_pipe('merge_noun_chunks')

nlp.add_pipe('merge_entities')

nlp.pipeline[('tok2vec', <spacy.pipeline.tok2vec.Tok2Vec at 0x1dc22fad400>),

('tagger', <spacy.pipeline.tagger.Tagger at 0x1dc22fadfa0>),

('parser', <spacy.pipeline.dep_parser.DependencyParser at 0x1dc26880d60>),

('attribute_ruler',

<spacy.pipeline.attributeruler.AttributeRuler at 0x1dc280c4b00>),

('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer at 0x1dc280d21c0>),

('ner', <spacy.pipeline.ner.EntityRecognizer at 0x1dc26880ba0>),

('merge_entities',

<function spacy.pipeline.functions.merge_entities(doc: spacy.tokens.doc.Doc)>)]doc = nlp(text)

for i, token in enumerate(doc[:10]):

print(i, token, sep="\t")0 The United States of America

1 (

2 U.S.A.

3 or

4 USA

5 )

6 ,

7 commonly

8 known

9 as14.4 DTM construction with spaCy

# Create list

examples = ["Helsinki is the capital of Finland",

"Tallinn is the capital of Estonia",

"The two capitals are joined by a ferry connection",

"Travelling between Helsinki and Tallinn takes about two hours",

"Ferries depart from downtown Helsinki and Tallinn"]

docs = list(nlp.pipe(examples))LEMMA is a spaCy object that refers to this particular linguistic feature, which we can pass to the count_by() method of a Doc object to instruct spaCy to count these linguistic features.

from spacy.attrs import LEMMA

lemma_counts = {i: doc.count_by(LEMMA) for i, doc in enumerate(docs)}

lemma_counts{0: {332692160570289739: 1,

10382539506755952630: 1,

7425985699627899538: 1,

15481038060779608540: 1,

886050111519832510: 1,

4881666681900411319: 1},

1: {7392857733388117912: 1,

10382539506755952630: 1,

7425985699627899538: 1,

15481038060779608540: 1,

886050111519832510: 1,

15428882767191480669: 1},

2: {7425985699627899538: 1,

11711838292424000352: 1,

15481038060779608540: 1,

10382539506755952630: 1,

16238441731120403936: 1,

16764210730586636600: 1,

11901859001352538922: 1,

16008623592554433546: 1,

14753437861310164020: 1},

3: {9016120516514741834: 1,

7508752285157982505: 1,

332692160570289739: 1,

2283656566040971221: 1,

7392857733388117912: 1,

6789454535283781228: 1,

883782512640661246: 1},

4: {16008623592554433546: 1,

11568774473013387390: 1,

7831658034963690409: 1,

18137549281339502438: 1,

332692160570289739: 1,

2283656566040971221: 1,

7392857733388117912: 1}}nlp.vocab[332692160570289739].text'Helsinki'lemma_counts = {i: {docs[i].vocab[k].text: v for k, v in counter.items()}

for i, counter in lemma_counts.items()}import pandas as pd

df = pd.DataFrame.from_dict(lemma_counts).sort_index(ascending=True)

df = df.fillna(0).T

df| Estonia | Finland | Helsinki | Tallinn | a | about two hour | and | be | between | by | ... | depart | downtown | ferry | from | join | of | take | the | travel | two | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 |

| 1 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 |

| 2 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | ... | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 |

| 3 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 |

| 4 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | ... | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

5 rows × 22 columns

14.4.1 manipulate data with pandas

word_df = df.stack().reset_index(). \

rename(columns = {'level_0': "id", "level_1":"word", 0: "N"}). \

sort_values(by=['id', "N"])

word_df| id | word | N | |

|---|---|---|---|

| 0 | 0 | Estonia | 0.0 |

| 3 | 0 | Tallinn | 0.0 |

| 4 | 0 | a | 0.0 |

| 5 | 0 | about two hour | 0.0 |

| 6 | 0 | and | 0.0 |

| ... | ... | ... | ... |

| 94 | 4 | and | 1.0 |

| 100 | 4 | depart | 1.0 |

| 101 | 4 | downtown | 1.0 |

| 102 | 4 | ferry | 1.0 |

| 103 | 4 | from | 1.0 |

110 rows × 3 columns

word_df.query('N > 0')| id | word | N | |

|---|---|---|---|

| 1 | 0 | Finland | 1.0 |

| 2 | 0 | Helsinki | 1.0 |

| 7 | 0 | be | 1.0 |

| 10 | 0 | capital | 1.0 |

| 17 | 0 | of | 1.0 |

| 19 | 0 | the | 1.0 |

| 22 | 1 | Estonia | 1.0 |

| 25 | 1 | Tallinn | 1.0 |

| 29 | 1 | be | 1.0 |

| 32 | 1 | capital | 1.0 |

| 39 | 1 | of | 1.0 |

| 41 | 1 | the | 1.0 |

| 48 | 2 | a | 1.0 |

| 51 | 2 | be | 1.0 |

| 53 | 2 | by | 1.0 |

| 54 | 2 | capital | 1.0 |

| 55 | 2 | connection | 1.0 |

| 58 | 2 | ferry | 1.0 |

| 60 | 2 | join | 1.0 |

| 63 | 2 | the | 1.0 |

| 65 | 2 | two | 1.0 |

| 68 | 3 | Helsinki | 1.0 |

| 69 | 3 | Tallinn | 1.0 |

| 71 | 3 | about two hour | 1.0 |

| 72 | 3 | and | 1.0 |

| 74 | 3 | between | 1.0 |

| 84 | 3 | take | 1.0 |

| 86 | 3 | travel | 1.0 |

| 90 | 4 | Helsinki | 1.0 |

| 91 | 4 | Tallinn | 1.0 |

| 94 | 4 | and | 1.0 |

| 100 | 4 | depart | 1.0 |

| 101 | 4 | downtown | 1.0 |

| 102 | 4 | ferry | 1.0 |

| 103 | 4 | from | 1.0 |

14.4.2 wordcloud

conda install -c conda-forge wordcloud

pip install stylecloudimport matplotlib

import matplotlib.pyplot as plt

%matplotlib inline

%config InlineBackend.figure_format = 'svg'import random

worddic = {row.word: row.N + random.randint(5, 15) for row in word_df.itertuples()}from wordcloud import WordCloud

wordcloud = WordCloud(

background_color="white", max_words=100,

max_font_size=250, random_state=42, width=1000,

height=800, margin=2).generate_from_frequencies(worddic)plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

plt.show()import stylecloud

#def gen_stylecloud(text=None,

# file_path=None, # 输入文本/CSV 的文件路径

# size=512, # stylecloud 的大小(长度和宽度)

# icon_name='fas fa-flag', # stylecloud 形状的图标名称(如 fas fa-grin)

# palette='cartocolors.qualitative.Bold_5', # 调色板(通过 palettable 实现)

# colors=None,

# background_color="white", # 背景颜色

# max_font_size=200, # stylecloud 中的最大字号

# max_words=2000, # stylecloud 可包含的最大单词数

# stopwords=True, # 布尔值,用于筛除常见禁用词

# custom_stopwords=STOPWORDS,

# icon_dir='.temp',

# output_name='stylecloud.png', # stylecloud 的输出文本名

# gradient=None, # 梯度方向

# font_path=os.path.join(STATIC_PATH,'Staatliches-Regular.ttf'), # stylecloud 所用字体

# random_state=None, # 控制单词和颜色的随机状态

# collocations=True,

# invert_mask=False,

# pro_icon_path=None,

# pro_css_path=None)stylecloud.gen_stylecloud(text = text,

output_name='data/cloud-temp.png',

size=500)

Font-awesome for shape: https://fontawesome.com/v4.7.0/icons/ Google Font: https://fonts.google.com/

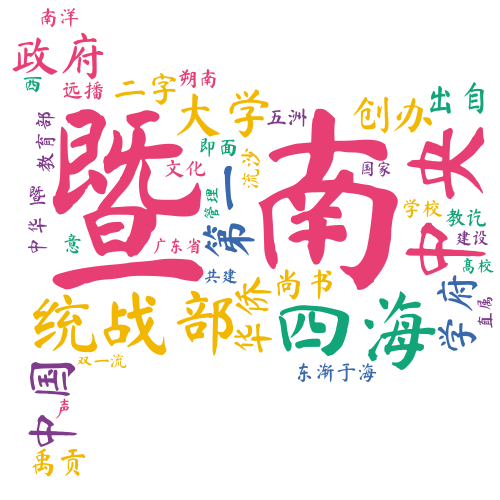

import spacy

nlp = spacy.load('zh_core_web_trf')

doc = nlp('暨南大学是中国第一所由政府创办的华侨学府。“暨南”二字出自《尚书·禹贡》:“东渐于海,西被于流沙,朔南暨,声教讫于四海。”意即面向南洋,将中华文化远播到五洲四海。学校目前是中央统战部、教育部、广东省共建的国家“双一流”建设高校,直属中央统战部管理。')

doc = " ".join([token.text for token in doc if not token.is_stop])

print(doc)暨南 大学 中国 第一 政府 创办 华侨 学府 暨南 二字 出自 尚书·禹贡 东渐于海 西 流沙 朔南 暨 声 教讫 四海 意 即面 南洋 中华 文化 远播 五洲 四海 学校 中央 统战部 教育部 广东省 共建 国家 双一流 建设 高校 直属 中央 统战部 管理stylecloud.gen_stylecloud(

text = doc,

font_path = "data/MaShanZheng-Regular.ttf",

output_name='data/cloud-temp2.png',

size=500)

stylecloud.gen_stylecloud(

text = doc,

font_path = "data/MaShanZheng-Regular.ttf",

output_name='data/cloud-temp3.png',

icon_name='fas fa-heart',

size=500)

14.4.3 similarity matrix

from sklearn.metrics.pairwise import cosine_similarity

# Evaluate cosine similarity between vectors

sim = cosine_similarity(df.values)

simarray([[1. , 0.66666667, 0.40824829, 0.15430335, 0.15430335],

[0.66666667, 1. , 0.40824829, 0.15430335, 0.15430335],

[0.40824829, 0.40824829, 1. , 0. , 0.12598816],

[0.15430335, 0.15430335, 0. , 1. , 0.42857143],

[0.15430335, 0.15430335, 0.12598816, 0.42857143, 1. ]])14.5 token/Doc Similarity

a = docs[0][0]

b = docs[0][-1]a.similarity(b)C:\Users\xinlu\AppData\Local\Temp\ipykernel_40628\3344546738.py:1: UserWarning:

[W007] The model you're using has no word vectors loaded, so the result of the Token.similarity method will be based on the tagger, parser and NER, which may not give useful similarity judgements. This may happen if you're using one of the small models, e.g. `en_core_web_sm`, which don't ship with word vectors and only use context-sensitive tensors. You can always add your own word vectors, or use one of the larger models instead if available.

0.22695933282375336In spaCy we can do this same thing at the document level. Through word vectors we can calculate the similarity between two documents. Let’s look at the example from spaCy’s documentation.

nlp = spacy.load('en_core_web_lg') # make sure to use larger package!

doc1 = nlp("I like salty fries and hamburgers.")

doc2 = nlp("Fast food tastes very good.")

# Similarity of two documents

print(doc1, doc2, doc1.similarity(doc2), sep='\n')I like salty fries and hamburgers.Fast food tastes very good.0.6871286202797843doc1[1].similarity(doc2[-2])0.5285203456878662doc1[1].similarity(doc1[-2])0.224704563617706314.6 transformer model in spaCy

nlp_trf = spacy.load('en_core_web_trf')

nlp_trf.pipeline[('transformer',

<spacy_transformers.pipeline_component.Transformer at 0x1dc3cd93100>),

('tagger', <spacy.pipeline.tagger.Tagger at 0x1dc29cd5dc0>),

('parser', <spacy.pipeline.dep_parser.DependencyParser at 0x1dc2b700cf0>),

('attribute_ruler',

<spacy.pipeline.attributeruler.AttributeRuler at 0x1dc53115c80>),

('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer at 0x1dc53118380>),

('ner', <spacy.pipeline.ner.EntityRecognizer at 0x1dc417a37b0>)]# Feed an example sentence to the model; store output under 'example_doc'

example_doc = nlp_trf("Helsinki is the capital of Finland.")

# Check the length of the Doc object

example_doc.__len__()7type(example_doc._.trf_data)spacy_transformers.data_classes.TransformerDataThe first item in the tensors list under index 0 contains the output for individual Tokens.

# Check the shape of the first item in the list

example_doc._.trf_data.tensors[0].shape(1, 11, 768)The second item under index 1 holds the output for the entire Doc.

# Check the shape of the first item in the list

example_doc._.trf_data.tensors[1].shape(1, 768)In both cases, the Transformer output is stored in a tensor, which is a mathematical term for describing a “bundle” of numerical objects (e.g. vectors) and their shape.

In the case of Tokens, we have a batch of 1 that consists of 11 vectors with 768 dimensions each.

We can access the first ten dimensions of each vector using the expression [:10].

Note that we need the preceding [0] to enter the first “batch” of vectors in the tensor.

# Check the first ten dimensions of the tensor

example_doc._.trf_data.tensors[0][0][:10]array([[-0.14800896, -0.36132938, -0.52402526, ..., 0.33104226,

-0.1899541 , -0.08459951],

[-1.0568136 , -0.659217 , 0.1645886 , ..., 1.0160642 ,

-1.5546881 , -0.36001885],

[-0.83849084, -0.5810041 , -0.07826854, ..., 0.55888575,

-1.3520969 , -0.37117025],

...,

[-0.33014834, -0.22176161, -0.00310517, ..., 2.5474327 ,

0.8233686 , -1.045297 ],

[-0.7981953 , -1.5335865 , -0.16577926, ..., 1.4347023 ,

-0.6925767 , -0.33236212],

[-0.8362223 , -0.81882554, -1.3890662 , ..., 0.10648382,

0.17524342, -0.29888782]], dtype=float32)spaCy Doc object with 7 Token objects represented by 11 vectors

# Access the Transformer tokens under the key 'input_texts'

example_doc._.trf_data.tokens['input_texts'][['<s>',

'H',

'els',

'inki',

'Ġis',

'Ġthe',

'Ġcapital',

'Ġof',

'ĠFinland',

'.',

'</s>']]14.7 中文NLP例子

14.7.1 自定义词典

import spacy

nlp = spacy.load('zh_core_web_sm')

doc = nlp('调整给水,注意给水流量与蒸汽流量相匹配,注意过热度,保证主蒸汽温度不超限。')

token_list = [f"{i}\t{token.text}\t{token.is_stop}" for i, token in enumerate(doc)]

print("\n".join(token_list))0 调整 False

1 给水 False

2 , True

3 注意 True

4 给 True

5 水流量 False

6 与 True

7 蒸汽 False

8 流量 False

9 相匹配 False

10 , True

11 注意 True

12 过 True

13 热度 False

14 , True

15 保证 False

16 主蒸 False

17 汽温度 False

18 不 True

19 超限 False

20 。 Trueproper_nouns = ['给水流量','蒸汽流量','过热度','主蒸汽']

nlp.tokenizer.pkuseg_update_user_dict(proper_nouns)

doc = nlp('调整给水,注意给水流量与蒸汽流量相匹配,注意过热度,保证主蒸汽温度不超限。')

token_list = [f"{i}\t{token.text}\t{token.is_stop}" for i, token in enumerate(doc)]

print("\n".join(token_list))0 调整 False

1 给水 False

2 , True

3 注意 True

4 给水流量 False

5 与 True

6 蒸汽流量 False

7 相匹配 False

8 , True

9 注意 True

10 过热度 False

11 , True

12 保证 False

13 主蒸汽 False

14 温度 False

15 不 True

16 超限 False

17 。 True14.7.2 自定义stopwords

from spacy.lang.zh.stop_words import STOP_WORDS

print(STOP_WORDS) # <- set of Spacy's default stop words

for word in ["保证", "超限"]:

STOP_WORDS.add(word) # 增加stop words

lexeme = nlp.vocab[word]

lexeme.is_stop = True{'到了儿', '当口儿', '从古至今', '若', '一直', '尽可能', '…………………………………………………③', '喔唷', '非独', '绝非', '[②g]', '您们', '当场', '一.', '而且', '$', '移动', '当中', '[③c]', '独自', '不起', '正常', '有着', '3', '纵', '都', '据我所知', '之後', '并没有', '拦腰', '既', '说明', '给', '这样', '显然', '就地', '所谓', '间或', '再其次', '全部', '故而', '乌乎', '难得', '叮咚', '宁肯', 'sup', '恰恰相反', '倘若', '至', '瑟瑟', '直到', '临', '往', ',也', '如前所述', 'Lex', 'В', '那时', '加之', 'e]', '依靠', '谁料', '于是', '中间', '与其', '喽', '本着', '大大', '除外', '[②e]', '日益', '及时', '反而', 'Ⅲ', '反映', '朝着', '简直', '余外', '几度', '接着', '略加', '考虑', '孰知', '绝对', '另行', '乃至', '任务', '不外', '背地里', '虽说', '不对', '如常', '周围', '连同', '乒', '趁着', '甚至于', '=', '继之', '抽冷子', '2.3%', '[①⑤]', '举凡', '任', '到头来', '可以', '别的', '及', '漫说', '别处', '才能', '敢', '本', '正如', '让', '不是', '出于', '来着', '[②b]', 'A', '为主', '[-', '按期', '[⑦]', ']', '必须', '几番', '除此之外', '[⑤]]', '从重', '另一方面', '与', '照', '[⑤d]', '么', '看上去', '同样', '到处', '>', '同时', '一切', '旁人', '你是', '取道', '急匆匆', '—', '}', '偶尔', '立即', '怎么样', '明确', '适应', '大家', '不管', '...', '什么', '企图', '扩大', '′|', '话说', '第二', '[④d]', '更加', '居然', '个人', '多少', '`', '坚持', '有及', '专门', '组成', '不止一次', '赶快', '长线', 'exp', '冲', '6', '不限', '相对', '全年', '以为', '不常', '藉以', '如次', '似的', '等到', '咚', '欤', '全体', '何时', '届时', '呆呆地', '5', '那么些', '即如', '常言说', '何处', '反过来说', ')÷(1-', '为什么', '乃至于', '当时', '多次', '这种', '一个', '各自', '还有', '~±', '一次', '清楚', '喀', '嗬', '经常', '〕〔', '吗', '如其', '〕', '只是', '连日来', '这般', '之所以', '必将', '上下', '自', '十分', '][', '②', '!', '适用', '以', '?', '啊', '之类', '较为', '零', '一时', '[④]', '<', '结合', '满足', '自从', '叮当', '两者', '5', '常言说得好', '哪儿', '即', '变成', '个', '传闻', '这会儿', '近几年来', '不只', '哪些', '最后', '亲手', '如期', '种', '别是', '比', '『', '已矣', '过去', '会', '几经', '方能', '若非', '充分', '要不是', '加强', '大力', '‘', '不论', '屡次三番', '[⑤]', '过于', '马上', '且说', '进去', '[⑤b]', '近年来', '#', '首先', '孰料', '[②G]', '要不', '粗', '帮助', '注意', '甚么', '不料', '0', '极端', '<φ', '即使', '倒是', 'sub', 'LI', '应当', '不拘', '或者', '亦', '恰如', '逐渐', '有些', '存心', '实现', '介于', '顷刻', '人民', '起先', '再则', '成年累月', '用来', '向', '照着', '省得', '把', '毫无保留地', '任何', '[①④]', '上', '此外', '12%', '重要', '正在', '依照', '根据', '立地', '多年前', '截然', '≈', '谁知', '再说', '别人', '之前', '过', '除去', '那儿', '并不', '后者', '//', '自打', '<', '[①c]', '或曰', '4', '她们', '坚决', '不变', '广大', '来讲', '伟大', '到底', '庶几', '其中', '毫无', '但凡', '前面', '以至于', '相同', '大面儿上', '倘', '正巧', '倒不如说', '[④e]', '[②⑧]', '仍然', '即是说', '由此可见', '⑦', '该', '不得不', '产生', '切', '掌握', '安全', '不惟', '从古到今', '何必', '怎麽', '...................', '彻夜', '砰', '[①i]', '〔', '咳', '而是', '%', '.', '绝不', '日渐', '[', '豁然', '并排', '只要', '[⑤f]', 'b]', '结果', '几乎', '通常', '谁', '长话短说', '联系', '一起', '最大', '你的', '不仅...而且', '⑤', '×', '阿', '㈧', '的话', '云尔', '<λ', '他们', '还是', '[①⑧]', '其他', '[①②]', ']∧′=[', '而论', '基本', '挨门挨户', '允许', '率尔', '如此', '再次', '}', '很少', '有', '反之则', '一旦', '换句话说', '及至', '好在', '遵循', '7', '遇到', '当即', '较', '不问', '当儿', './', '矣哉', '究竟', '俺', '设若', '二', ',', '吱', '[②j]', '而后', '各', '下列', '6', '嘎嘎', '不够', '所', '充其量', ')', '不再', '[②②]', '2', '长此下去', '拿', ';', '多多少少', '竟', '近来', '梆', '屡次', '就是', '然则', '除此而外', '|', '替', '并', '尤其', '臭', '尽然', '犹且', '故意', '决不', '单单', '引起', '见', '趁早', '主要', '所以', '次第', '而外', '积极', '就是说', '奈', '以来', '互相', '难道说', '敞开儿', '岂', '没有', '吧', '除却', '从不', '可好', '单', '可能', '昂然', '我是', '罢了', '多么', '或多或少', '内', '当真', '更进一步', '较之', '最近', '实际', '就要', '有的是', '几', '范围', '多亏', '由是', '固然', '非但', '迅速', '只怕', '看见', '构成', '腾', '之一', '维持', '5:0', '当前', '立时', '下面', '此次', '或是', '我', '中小', '川流不息', '一些', '不下', '有所', ')、', '谨', '[①⑦]', '从优', '保持', ':', '规定', '朝', '今', '对方', '恰恰', '欢迎', ',', '[①f]', '顷刻间', '--', '就此', '怪不得', '八成', '只', '至于', '乘', '只限', '呀', '以故', '若是', '.数', '管', '极力', '也', '岂非', '陈年', '之后', '乘虚', '动辄', '凑巧', '[③g]', '其', '没奈何', '刚巧', '再', '纵使', '亲身', '重新', '大凡', '暗中', '不足', '至若', '来', '然後', '即或', '大不了', '别管', '《', '很', '③]', '也就是说', '等等', '∈[', '除此以外', '仅仅', '不消', '哪天', '当地', '嘻', '诸位', '怕', '沿', '=', '呗', '小', '纯粹', '嘎', '[②d]', '三番两次', '陡然', '甚至', '经', '为何', '_', '乘势', '不已', '归齐', '》),', '甫', '致', '後来', '某些', '¥', '乃', '它们', '我的', '一番', '保管', '饱', '从新', '暗自', '能', '要是', '有著', '连连', '以至', '严格', '大事', '兮', '进来', '*', '除了', '不外乎', 'R.L.', '彼', '的', '也是', '具有', '先後', '转贴', '倘然', '因了', '#', '切勿', '古来', '不特', '趁势', '自身', '凭', '呜呼', '得到', '%', '偶而', '啐', '蛮', '上去', '将要', '彼此', '而又', '可', '哪怕', '初', '突然', '哗', '某个', '她的', '开始', '’‘', '却', '八', '…', '[①①]', '不时', '[②]', '接连不断', '以后', '反过来', '普遍', '是否', '哎', '(', '并且', '起', '甚而', '快要', '紧接着', '交口', '尽管', '以外', '转动', '巴巴', '已', '所有', '方便', '表示', '奋勇', '累次', '不比', '则', '恐怕', '所幸', '共同', '或则', '战斗', '他人', '不光', '得天独厚', '许多', '傥然', '者', '[⑩]', '最好', '[②⑤]', '据悉', '大概', 'A', '才', '行动', '从而', '[③F]', '的确', '数/', '进入', '尔后', '别说', '屡', '请勿', '[⑥]', '具体说来', '截至', '⑥', '岂但', '又', '简而言之', '串行', '因着', '限制', '从', '对于', '多', '待', '_', '再者说', '所在', '设或', '顿时', '并不是', '何以', '着呢', '放量', '同', '向使', '[①⑥]', '丰富', '不要', '不止', '~', '即令', '顷', '不独', '何', '率然', '兼之', '牢牢', '.一', '诚然', '打开天窗说亮话', '不由得', '那里', '喂', '说来', '大体上', '具体来说', '日复一日', '因为', '待到', '这次', '嗳', '为什麽', '加入', '呸', '[③h]', '~+', '然后', '互', '[②B]', '猛然', '着', '[①a]', '迟早', 'γ', '[①D]', '更', '替代', '附近', '这就是说', '要么', '大多数', '完全', '很多', '一面', '2', '庶乎', '咱', '毋宁', '那末', '自己', '当庭', '不仅仅', '以下', '继而', '突出', '暗地里', '〉', '赶', '敢情', '按说', '无论', '连日', '如上', '怎么办', '从今以后', '然而', '属于', '另', '轰然', '一来', '啦', '[③a]', '果真', '殆', '哪里', '六', '其一', '他的', '彼时', '隔夜', '奇', '看到', '好象', '决定', '每当', '齐', '极度', '啊呀', '毫无例外', '一下', '相当', '个别', '[②', '不但', '它的', '只有', '老是', '怪', '呼哧', '呢', '现在', '表明', '和', '倍加', '大约', '起头', '无', '【', '[②⑩]', '嗡', '连', '+', '·', '此后', '分别', '今天', '恰逢', '里面', 'ZXFITL', '往往', '人们', '喏', '那么', '可见', '打', '凝神', '借', '越是', '必', '贼死', '有关', '顷刻之间', '一片', '皆可', '临到', '略微', '[⑧]', '即刻', '对比', '——', '嘿', '尽', '换言之', '"', '^', '尽管如此', '大多', '=″', '各式', '偏偏', '为此', '背靠背', '平素', '与否', '7', '是的', '最後', '看来', '何须', '代替', '前此', 'c]', '将近', '强烈', '碰巧', '先不先', '[①d]', '合理', '鄙人', '有效', '至今', '从严', '以上', '弗', '[②f]', '据此', '人家', '便', '既...又', '咱们', '分头', '[②④', '另悉', '上述', '九', '深入', '直接', '何乐而不为', '有点', '长期以来', '元/吨', '呐', '认识', '此地', '难道', '二话不说', '便于', '特殊', '一致', '在', '方面', '不能不', '您是', '[⑤e]', '五', '原来', '看出', '屡屡', '各级', '又及', '顶多', '假若', '[④c]', '在于', '假如', '差不多', '趁便', '容易', '莫', '绝', '乘隙', '少数', '[②③]', '叫做', '──', '不仅', '何尝', '人', '连声', '倍感', '分期', '赖以', '只当', '诸', '整个', ';', '刚才', '再者', '矣', '[①h]', '为着', '权时', '[①⑨]', '乘机', '哼唷', '&', '前进', '得了', '不少', '以後', ':', '极其', '[①C]', '除此', '对应', '』', '现代', '设使', '够瞧的', '总而言之', '达到', '随着', '儿', '除', '今後', '敢于', '或许', '不得已', '来看', '运用', '宣布', '要', '如是', '冒', '于', '普通', '如此等等', '」', '哇', '一边', '×××', '从小', '简言之', '一样', '?', '颇', '更为', '这边', '常', '纵然', '共总', '挨家挨户', '那个', '尚且', '3', '逢', '七', '作为', '怎奈', '譬喻', '具体', '沿着', '-', '大略', '风雨无阻', '各地', '一', '借以', '挨着', '这些', '[①]', '亲自', '哎呀', '>>', '其实', '避免', '从轻', '成年', '三天两头', '均', '在下', '完成', '这', '不择手段', '1.', '如', '每年', '一一', '不若', '.', '俺们', '以前', '仍', '尽心竭力', '从未', '呵呵', '保险', '[①B]', '先生', '曾', '[①③]', '比及', '[①A]', '不经意', '并非', '局外', '双方', '~', '++', '如何', '不怎么', '仅', '尔等', 'φ', '当下', '反之', '岂止', '该当', '那边', '尽快', '并无', '总是', '嗡嗡', '弹指之间', '独', '︿', '一则通过', '来得及', '绝顶', '*', '这儿', '{', '--', '默默地', '1', '若夫', '$', '获得', '..', '~~~~', '其余', '趁', '年复一年', '反之亦然', '呜', '不免', '转变', '[③e]', '仍旧', '那么样', '带', '以致', '几时', '今后', '[③b]', '末##末', '到', '应用', '自后', '[④b]', '反倒', '下去', '老老实实', '<Δ', '愿意', '从中', '第', '如同', '必定', '它', '良好', '这么些', '啊哈', '随著', '练习', '对', '此处', '比较', '起初', '如若', '不断', '吧哒', '同一', '反倒是', 'φ.', '前后', '多多', '唯有', '因', '▲', '宁', '[④a]', '比方', '促进', '那', '半', '单纯', '例如', '不可', '′∈', '迄', '由于', '假使', '从无到有', '按照', '挨门逐户', '极为', '相对而言', '觉得', '但', '不如', '与此同时', '如上所述', '———', '总之', '倘或', '不得', '方', '尽量', '::', '好', '不满', '密切', '真正', '路经', '适当', '遭到', '格外', '除开', '方才', '本人', '刚', '”', '何苦', ''', '得', '你们', '接著', '总的说来', '他是', '咦', '过来', '。', '四', '为', '非得', '不但...而且', '定', '各个', '且不说', '】', '像', '谁人', '云云', '怎么', '隔日', '被', '上来', '共', '必然', '恰巧', '汝', '哎哟', '而况', '不至于', '各人', '白白', '我们', '哪边', '0', '多多益善', '连袂', '③', '哈', '正值', '一定', '来不及', '不过', '还', '非常', '由此', '8', '出去', '概', '了解', '顺', '出来', '如果', '正是', '什麽', '极了', '只消', '挨个', '处在', '难说', '哗啦', '自个儿', '■', '开外', '千', '处处', '上升', '尽心尽力', '需要', '[②h]', '自各儿', '纯', '以期', '可是', '诸如', '啥', '已经', '上面', '恰似', '一般', '特点', '全力', '相等', '是以', '论说', '与其说', '及其', '後面', '}>', '不会', '不能', '精光', '不然', '历', '!', '忽然', '巴', '不管怎样', '从早到晚', '离', '匆匆', '既是', '始而', '啪达', "'", '理应', '它们的', '因此', '咧', '不定', '传', '凡是', '经过', '竟而', '使', '受到', '这一来', '那会儿', '总的来看', '莫如', '由', '白', '则甚', '那般', '……', '自家', '本身', '1', '眨眼', '如今', '归', '有时', '一方面', '竟然', '意思', '据称', '无宁', '多年来', '=[', '必要', '莫若', '是不是', '鉴于', 'a]', '形成', '惟其', '每天', '或', '哈哈', '别', '|', '全都', '不胜', '全面', '......', '何妨', '于是乎', '显著', '万一', '据说', '→', '却不', '趁机', '甚或', '从来', '复杂', '联袂', '0:2', '不同', '从头', '认为', '不久', '每', '不一', '前者', '下', '但是', '〈', '向着', '反手', '总的来说', '={', '您', '不大', '另一个', '起来', '当然', '这时', '知道', '凭借', '他', '行为', '即若', '多数', '逐步', '一则', '继后', '大体', '巨大', '相似', '这麽', '猛然间', '忽地', '赶早不赶晚', '啷当', '出', '倘使', '⑨', '类如', '略', '全身心', '哪年', '能够', '另外', '开展', '能否', '她是', '尔尔', '[③d]', '相应', '也好', '先后', '从速', '此中', '举行', '沙沙', '挨次', '穷年累月', '不敢', '另方面', '哟', '[②①]', '随', '似乎', '基本上', '这么点儿', '凡', '针对', '[②⑦]', '日见', '[', '下来', '老', '既然', '莫不然', '而已', '各种', '极大', '吓', '己', '争取', '每每', '略为', '大都', '不巧', '@', '达旦', '对待', '大抵', '伙同', '不知不觉', '其它', '大量', '呼啦', '莫非', '目前', '每个', '故此', '其后', '亲口', '[⑨]', '相反', '断然', '故', '反应', '是', '常言道', 'Δ', '看起来', '”,', '采取', 'μ', '千万', '要求', '随后', 'Ψ', '大张旗鼓', '今年', '即便', '们', '准备', '这么', '了', '部分', '一转眼', '此', '距', '这么样', '最高', '后面', '愤然', '取得', '此间', '而言', '重大', '哉', '叫', '据', '二来', '使用', '[②c]', '姑且', '立', '来自', '借此', '特别是', '左右', '论', '总结', '=-', '使得', '以及', '嗯', '’', ')', '默然', '[②⑥]', '曾经', '理当', '缕缕', '日臻', '差一点', '要不然', '从此', '通过', '固', '迫于', '(', '随时', '那麽', '到目前为止', '<<', '综上所述', '②c', '[⑤a]', '为止', '那些', '不尽然', '归根到底', '大', '不', '比如说', '窃', '莫不', '慢说', '果然', '巩固', '千万千万', '而', '不得了', '其二', '有的', '犹自', '防止', '纵令', '关于', '社会主义', '切不可', '虽然', '三番五次', '4', '具体地说', '明显', '各位', '最', '://', '9', '从宽', '看', '就算', '呃', '8', '有力', '这点', '唉', '来说', '出现', '分期分批', '失去', '[*]', '哼', '按理', '大举', '就是了', '加上', '啊哟', '极', '既往', '》', '起首', '加以', '不迭', '它是', '毫不', '不曾', '近', '依', '此时', '虽', '一何', '一天', '心里', '甭', '比起', '何况', '满', '且', '惯常', '处理', '毕竟', '广泛', '等', '抑或', '从事', '比照', '并没', '较比', '恍然', '集中', '不亦乐乎', '譬如', '咋', '矣乎', '边', '后', '到头', '决非', '因而', '严重', '以便', '呵', '还要', '立刻', '那样', '不怕', '-[*]-', '本地', '任凭', '不必', '进行', '不成', '顺着', '<±', '不可开交', '也罢', '些', '公然', '靠', '有利', '不力', '虽则', '之', '④', '应该', '勃然', '传说', '亲眼', '从此以后', '快', '真是', '切切', '切莫', '她', '打从', '起见', '非特', '不日', '光是', '不单', '不可抗拒', '说说', '[③⑩]', '况且', '将', '不仅仅是', '趁热', '嘛', '宁可', '哪样', '并肩', '根本', '造成', '再有', '就', '不妨', '光', '不尽', '立马', '人人', '般的', '[②i]', '好的', '“', '[]', '高兴', '扑通', '⑧', '乎', '高低', '除非', '⑩', '归根结底', '问题', '.日', '每时每刻', '为了', '①', '按时', 'f]', '遵照', '某某', '策略地', '[①e]', '去', '你', '[③①]', '呕', '当头', '宁愿', '认真', '相信', '用', '∪φ∈', '尔', '彻底', 'ng昉', '>λ', '进步', '每逢', '如下', '尽早', '成为', '怎样', '>', '累年', '召开', '然', ']', '成心', '9', '=☆', '不然的话', '诚如', '得起', '非徒', '时候', '乘胜', '充其极', '哪个', '比如', '跟', '继续', '某', '接下来', '大致', '三', '这里', '难怪', '[①E]', '主张', '地', '-β', '以免', '+ξ', '刚好', '[①g]', '不了', '看样子', '进而', '尽如人意', '-', '&', '{-', '嘘', '[①o]', '得出', '倒不如', '强调', '大批', '/', '后来', '据实', '即将', '存在', '这个', '看看', '、', '若果', '哩', '否则', '嘎登', '无法', '确定', '活', '基于', '/', '做到', '=(', '何止', '按', '二话没说', '@', '嘿嘿', '常常', '当着', '哦', '什么样', '动不动', '[③]', '甚且', '老大', '理该', '+', '当', '焉', '其次', '没', '将才', '℃', '[②a]', '但愿', '全然', '依据', '哪', '↑', '怎', '恰好', '望'}doc = nlp('调整给水,注意给水流量与蒸汽流量相匹配,注意过热度,保证主蒸汽温度不超限。')

token_list = [f"{i}\t{token.text}\t{token.is_stop}" for i, token in enumerate(doc)]

print("\n".join(token_list))0 调整 False

1 给水 False

2 , True

3 注意 True

4 给水流量 False

5 与 True

6 蒸汽流量 False

7 相匹配 False

8 , True

9 注意 True

10 过热度 False

11 , True

12 保证 True

13 主蒸汽 False

14 温度 False

15 不 True

16 超限 True

17 。 Truefor word in ["保证", "超限"]:

STOP_WORDS.remove(word) # 剔除stop words

lexeme = nlp.vocab[word]

lexeme.is_stop = False

doc = nlp('调整给水,注意给水流量与蒸汽流量相匹配,注意过热度,保证主蒸汽温度不超限。')

token_list = [f"{i}\t{token.text}\t{token.is_stop}" for i, token in enumerate(doc)]

print("\n".join(token_list))0 调整 False

1 给水 False

2 , True

3 注意 True

4 给水流量 False

5 与 True

6 蒸汽流量 False

7 相匹配 False

8 , True

9 注意 True

10 过热度 False

11 , True

12 保证 False

13 主蒸汽 False

14 温度 False

15 不 True

16 超限 False

17 。 True14.8 spacy pipe to speed up

处理多个文档时可以用nlp.pipe. It is specifically used to process text as a sequence of strings. This is much more efficient than processing text one by one. If you’re only processing a single text, simply remove the ‘.pipe’ extension. Source: https://spacy.io/usage/processing-pipelines

- save into file

example.py - run in terminal

python example.py

import spacy

texts = [

"Net income was $9.4 million compared to the prior year of $2.7 million.",

"Revenue exceeded twelve billion dollars, with a loss of $1b.",

]*10

nlp = spacy.load("en_core_web_sm")

for doc in nlp.pipe(texts, n_process=4, batch_size=200):

# Do something with the doc here

print([(ent.text, ent.label_) for ent in doc.ents])14.9 spacy教程 / Model

spacy中文教程: https://course.spacy.io/zh/

spacy101介绍: https://spacy.io/usage/spacy-101

spacy model下载: https://github.com/explosion/spacy-models/tags

spacy universe: https://spacy.io/universe

下载MDA文本: 下载